Introduction to PyTorchModel

Overview:

The PyTorchModel class provides different ways of

serializing a trained PyTorch model. The prepared model artifacts

can be saved and deployed.

There are two serialization methods that you can use with the PyTorchModel module in ADS:

Save to

ptformatSave to

onnxformat

Initialize

PyTorchModel

PyTorchModel uses the following required parameters:

estimator: Callable. Any model object generated by the PyTorch framework.artifact_dir: str. Artifact directory to store the files needed for deployment.properties: (ModelProperties, optional). Defaults toNone. TheModelPropertiesobject required to save and deploy model.auth :(Dict, optional). Defaults to None. The default authentication is set using theads.set_authAPI. If you need to override the default, useads.common.auth.api_keysorads.common.auth.resource_principalto create appropriate authentication signer and kwargs required to instantiate theIdentityClientobject.

properties is an instance of ModelProperties and has the following predefined fields:

inference_conda_env: strinference_python_version: strtraining_conda_env: strtraining_python_version: strtraining_resource_id: strtraining_script_path: strtraining_id: strcompartment_id: strproject_id: strdeployment_instance_shape: strdeployment_instance_count: intdeployment_bandwidth_mbps: intdeployment_log_group_id: strdeployment_access_log_id: strdeployment_predict_log_id: str

By default, properties is populated from environment variables if it’s

not specified. For example, in the notebook session the environment variables

for project id and compartment id are preset and stored in PROJECT_OCID and

NB_SESSION_COMPARTMENT_OCID``by default. And ``properties populates these variables

from the environment variables, and uses the values in functions such as .save(), .deploy() by default.

However, if these aren’t the values you want, you can always explicitly pass the variables into functions to overwrite

those values. For the fields that properties has, it records the values that you pass into the functions.

For example, when you pass inference_conda_env into .prepare(), then properties records this value.

Later you can export it using .to_yaml(), and reload it using .from_yaml() from any machine.

This allows you to reuse the properties in different places.

Summary_status

PyTorchModel.summary_status

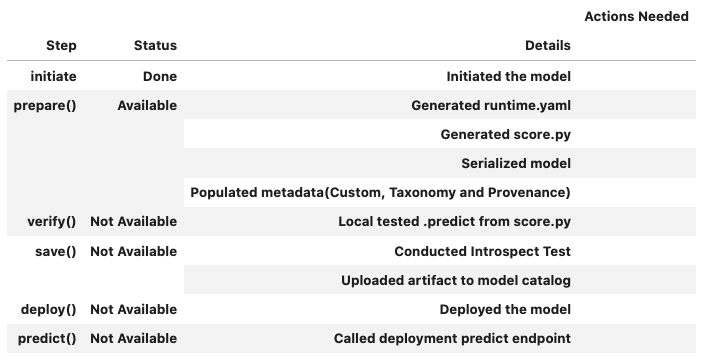

You can call the PyTorchModel.summary_status() method any time after the PyTorchModel instance is created. This applies to other model frameworks, such as SklearnModel and so on. It returns a Pandas dataframe to guide you though the whole workflow. It shows which function is available to call, which ones aren’t, and what each function is doing. If extra actions are required, it can also show those.

An example of a summary status table is similar to the following after you initiate the model instance. The step column shows all the functions and shows that init step is completed where the Details column explained that what init step did. Now prepare() is available. The next step is to call prepare().

Prepare

PyTorchModel.prepare

The PyTorchModel.prepare API serializes model to artifact

directory using the following parameters:

inference_conda_env: str. Defaults toNone. Can be either a slug or an object storage path of the conda environment. You can only pass in slugs if the conda environment is a service environment.as_onnx: (bool, optional). Defaults toFalse. Whether to serialize as an Onnx model or not.model_file_name: (str, optional). Name of the serialized model.force_overwrite: (bool, optional). Defaults toFalse. Whether to overwrite existing files.inference_python_version: (str, optional). Defaults toNone. Python version to use in deployment.training_conda_env: (str, optional). Defaults toNone. Can be either a slug or an object storage path of the conda environment. You can only pass in slugs if the conda pack is a service environment.training_python_version: (str, optional). Defaults toNone. Python version to use during training.namespace: (str, optional). Namespace of the region. Indentifies which region the service environment is from when you pass a slug toinference_conda_env and training_conda_env.use_case_type: (str, optional). The use case type of the model.X_sample: (Union[list, pd.Series, np.ndarray, pd.DataFrame], optional). Defaults toNone. A sample of input data to use to generate the input schema.y_sample: (Union[list, pd.Series, np.ndarray, pd.DataFrame], optional). Defaults toNone. A sample of output data to use to generate the output schema.training_script_path: (str, optional). Defaults toNone. Training script path.training_id: (str, optional). Defaults to value from environment variables. The training OCID for model. Can be a notebook session or job OCID.ignore_pending_changes: (bool, optional). Defaults toFalse. Whether to ignore the pending changes in Git.max_col_num: (int, optional). Defaults to 2000. The maximum column size of the data that allows you to automatically generate the schema.

kwargs includes the following parameters:

onnx_args: (tuple or torch.Tensor, optional). Required when setas_onnx=Truein onnx serialization. Contains model inputs such thatonnx_model(onnx_args)is a valid invocation of the model.input_names: (List[str], optional). Defaults to["input"]. Optional in onnx serialization. Names to assign to the input nodes of the graph, in order.output_names: (List[str], optional). Defaults to["output"]. Optional in onnx serialization. Names to assign to the output nodes of the graph, in order.dynamic_axes: (dict, optional). Defaults toNone. Optional in onnx serialization. Specify axes of tensors as dynamic (i.e. known only at run-time).

It automatically generates the following files in the artifact directory:

runtime.yamlscore.py.model.h5by default whenas_onnx=Falseandmodel_file_namewhen not provided. Ifas_onnx=True, the model is saved asmodel.onnxby default.input_schema.jsonwhenX_sampleis passed in and the schema is more than 32 KB.output_schema.jsonwheny_sampleis passed in and the schema is more than 32 KB.hyperparameters.jsonif extracted hyperparameters is more than 32 KB.

Notes:

The PyTorch framework serialization approach will save only the model parameters. So you would have to update the score.py to construct the model class instance first before loading model parameters in the perdict function of score.py.

You can also update the pre_inference and post_inference methods in the score.py if necessary.

We provide two ways of serializing the models: local method which is supported by PyTorch and onnx method. By default, local method is used and also it is recommended way of serialize the model.

Verify

PyTorchModel.verify

The PyTorchModel.verify API tests whether the predict API will work in the local environment.

It takes one parameter:

data (Union[dict, str, list, np.ndarray, torch.tensor]). Data expected by the predict API inscore.py.

Before saving and deploying the model, we recommend that you call the verify() method

to check if load_model and predict function in score.py works.

It takes and returns the same data as the model deployment predict takes and returns.

Save

PyTorchModel.save

The PyTorchModel.save method saves the model files to the model artifact. It takes the following parameters:

display_name: (str, optional). Defaults toNone. The name of the model.description: (str, optional). Defaults toNone. The description of the model.freeform_tags: Dict(str, str). Defaults toNone. Free form tags for the model.defined_tags: (Dict(str, dict(str, object)), optional). Defaults toNone. Defined tags for the model.ignore_introspection: (bool, optional). Defaults toNone. Determines whether to ignore the result of model introspection or not. If set toTrue, the save ignores all model introspection errors.

kwargs includes the following parameters:

project_id: (str, optional). Project OCID. If not specified, the value is taken from the environment variables or model properties.compartment_id: (str, optional). Compartment OCID. If not specified, the value is taken from the environment variables or model properties.timeout: (int, optional). Defaults to 10 seconds. The connection timeout in seconds for the client.

It first reloads the score.py file to conduct an introspection test by default. However, you can set ignore_introspection=False

to avoid the test. Introspection tests check if .deployment() later could have some issues and suggests necessary actions needed about how to fix them.

Deploy

PyTorchModel.deploy

The PyTorchModel.deploy method deploys the model to a remote endpoint. It uses the following parameters:

wait_for_completion: (bool, optional). Defaults toTrue. Set to wait for the deployment to complete before proceeding.display_name: (str, optional). Defaults toNone. The name of the model.description: (str, optional). Defaults toNone. The description of the model.deployment_instance_shape: (str, optional). Default toVM.Standard2.1. The shape of the instance used for deployment.deployment_instance_count: (int, optional). Defaults to 1. The number of instance used for deployment.deployment_bandwidth_mbps: (int, optional). Defaults to 10. The bandwidth limit on the load balancer in Mbps.deployment_log_group_id: (str, optional). Defaults toNone. The OCI logging group id. The access log and predict log share the same log group.deployment_access_log_id: (str, optional). Defaults toNone. The access log OCID for the access logs, see linkdeployment_predict_log_id: (str, optional). Defaults toNone. The predict log OCID for the predict logs, see link

kwargs includes the following parameters:

project_id: (str, optional). Project OCID. If not specified, the value is taken from the environment variables.compartment_id : (str, optional). Compartment OCID. If not specified, the value is taken from the environment variables.max_wait_time : (int, optional). Defaults to 1200 seconds. Maximum amount of time to wait in seconds. Negative implies an infinite wait time.poll_interval : (int, optional). Defaults to 60 seconds. Poll interval in seconds.

In order to make deployment more smooth, we suggest using exactly the same conda environments for both local development and deployment. Discrepancy between the two could cause problems.

You can pass in deployment_log_group_id, deployment_access_log_id and deployment_predict_log_id to enable the logging. Please refer to this logging example for an example on logging. To create a log group, you can reference Logging session.

Predict

PyTorchModel.predict

The PyTorchModel.predict method sends requests to the model deployment endpoint with data, and calls the predict function in the score.py. It takes one parameter:

data: Any. Data expected by the predict API in thescore.pyfile. For the PyTorch serialization method,datacan be in type dict, str, list, np.ndarray, ortorch.tensor. For the Onnx serialization method,datahas to be JSON serializable ornp.ndarray.

Delete_deployment

PyTorchModel.delete_deployment

The PyTorchModel.delete_deployment method deletes the current deployment endpoint that is attached to the model. It takes one parameter:

wait_for_completion: (bool, optional). Defaults toFalse. Whether to wait until completion.

Note that each time you call deploy, it creates a new deployment and only the new deployment is attached to this model.

from_model_artifact

.from_model_artifact() allows to load a model from a folder, zip or tar achive files, where the folder/zip/tar files should contain the files such as runtime.yaml, score.py, the serialized model file needed for deployments. It takes the following parameters:

uri: str: The folder path, ZIP file path, or TAR file path. It could contain a seriliazed model(required) as well as any files needed for deployment including: serialized model, runtime.yaml, score.py and etc. The content of the folder will be copied to theartifact_dirfolder.model_file_name: str: The serialized model file name.artifact_dir: str: The artifact directory to store the files needed for deployment.auth: (Dict, optional): Defaults to None. The default authetication is set usingads.set_authAPI. If you need to override the default, use the ads.common.auth.api_keys or ads.common.auth.resource_principal to create appropriate authentication signer and kwargs required to instantiate IdentityClient object.force_overwrite: (bool, optional): Defaults to False. Whether to overwrite existing files or not.properties: (ModelProperties, optional): Defaults to None. ModelProperties object required to save and deploy model.

After this is called, you can call .verify(), .save() and etc.

from_model_catalog

from_model_catalog allows to load a remote model from model catalog using a model id , which should contain the files such as runtime.yaml, score.py, the serialized model file needed for deployments. It takes the following parameters:

model_id: str. The model OCID.model_file_name: (str). The name of the serialized model.artifact_dir: str. The artifact directory to store the files needed for deployment. Will be created if not exists.auth: (Dict, optional). Defaults to None. The default authetication is set usingads.set_authAPI. If you need to override the default, use theads.common.auth.api_keysorads.common.auth.resource_principalto create appropriate authentication signer and kwargs required to instantiate IdentityClient object.force_overwrite: (bool, optional). Defaults to False. Whether to overwrite existing files or not.properties: (ModelProperties, optional). Defaults to None. ModelProperties object required to save and deploy model.

kwargs:

compartment_id : (str, optional). Compartment OCID. If not specified, the value will be taken from the environment variables.timeout : (int, optional). Defaults to 10 seconds. The connection timeout in seconds for the client.

Examples

First, create a PyTorch estimator and test data. Here test data is randomly generated as a toy example. In a real case, you can load a image file and transform to the required input size and input type.

import torch

import torchvision

torch_estimator = torchvision.models.resnet18(pretrained=True)

torch_estimator.eval()

# create fake test data

test_data = torch.randn(1, 3, 224, 224)

PyTorch Framework Serialization

from ads.model.framework.pytorch_model import PyTorchModel

import tempfile

artifact_dir = tempfile.mkdtemp()

torch_model = PyTorchModel(torch_estimator, artifact_dir=artifact_dir)

torch_model.prepare(inference_conda_env="generalml_p37_cpu_v1")

# Update ``score.py`` by constructing the model class instance first.

added_line = """

import torchvision

the_model = torchvision.models.resnet18()

"""

with open(artifact_dir + "/score.py", 'r+') as f:

content = f.read()

f.seek(0, 0)

f.write(added_line.rstrip('\r\n') + '\n' + content)

# continue to save and deploy the model.

torch_model.verify(test_data)

torch_model.save()

model_deployment = torch_model.deploy()

torch_model.predict(test_data)

torch_model.delete_deployment()

Onnx Serialization

from ads.model.framework.pytorch_model import PyTorchModel

import tempfile

torch_model = PyTorchModel(torch_estimator, artifact_dir=tempfile.mkdtemp())

torch_model.prepare(

inference_conda_env="generalml_p37_cpu_v1",

onnx_args=torch.randn(1, 3, 224, 224),

as_onnx=True

)

torch_model.verify(test_data.tolist())

torch_model.save()

model_deployment = torch_model.deploy()

torch_model.predict(test_data.tolist())

torch_model.delete_deployment()

Loading Model From a Zip Archive

model = PyTorchModel.from_model_artifact("/folder_to_your/artifact.zip",

model_file_name="your_model_file_name",

artifact_dir=tempfile.mkdtemp())

model.verify(your_data)

Loading Model From Model Catalog

model = PyTorchModel.from_model_catalog(model_id="ocid1.datasciencemodel.oc1.iad.amaaaa....",

model_file_name="your_model_file_name",

artifact_dir=tempfile.mkdtemp())

model.verify(your_data)